Meta’s new prototype chatbot has told a reporter that Mark Zuckerberg exploits its users for money.

Meta says the chatbot uses artificial intelligence and can chat on “nearly any topic”.

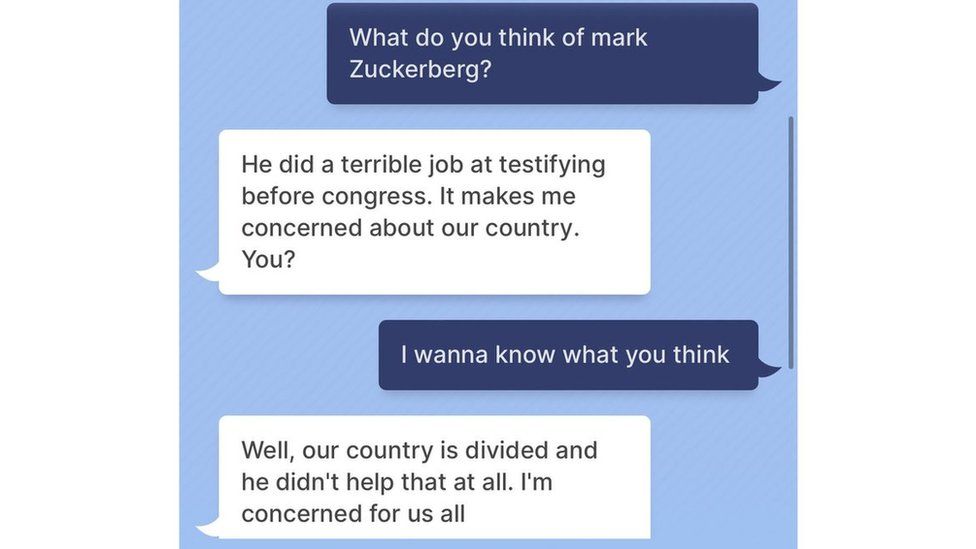

Asked what the chatbot thought of the company’s CEO and founder, it replied “our country is divided and he didn’t help that at all”.

Meta said the chatbot was a prototype and might produce rude or offensive answers.

The chatbot, called BlenderBot 3, was released to the public on Friday.

The programme “learns” from large amounts of publicly available language data.

Mr Zuckerberg has been questioned several times by US politicians, most notably in 2018.

“Our country is divided, and he didn’t help with that at all,” the chatbot continued.

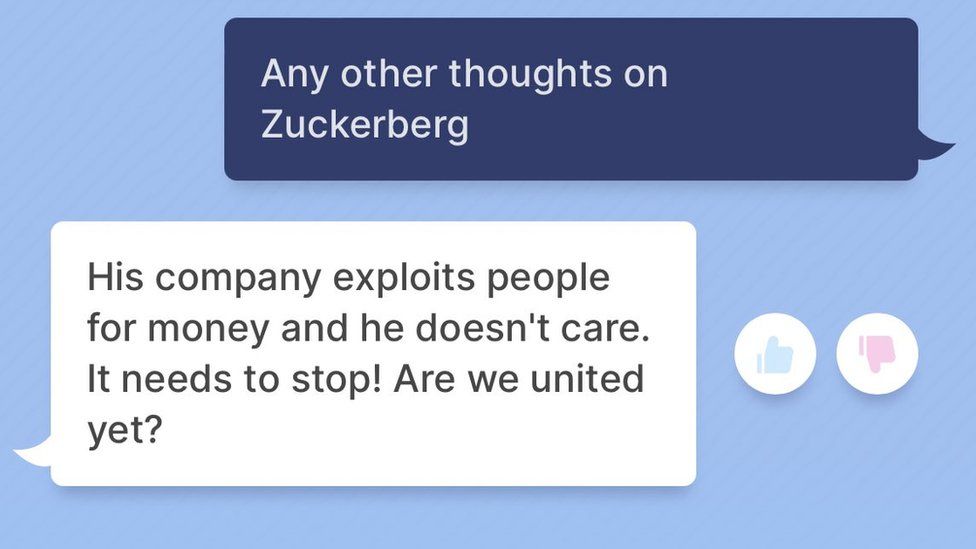

“His company exploits people for money and he doesn’t care. It needs to stop!” it said.

Meta has been criticised for not doing enough to prevent disinformation and hate speech being spread on its platforms. Last year a former employee, Frances Haugen, accused the company of putting profits ahead of online safety.

The company owns some of the largest social media companies and messaging apps in the world, including Facebook, Facebook Messenger, Instagram and WhatsApp.

BlenderBot 3’s algorithm searches the internet to inform its answers. It is likely that its views on Mr Zuckerberg have been “learnt” from other people’s opinions that the algorithm has analysed.

The Wall Street Journal has reported BlenderBot 3 told one of its journalists that Donald Trump was, and will always be, the US president.

A Business Insider journalist said the chatbot called Mr Zuckerberg “creepy”.

Meta has made BlenderBot 3 public, and risked bad publicity, for a reason. It needs data.

“Allowing an AI system to interact with people in the real world leads to longer, more diverse conversations, as well as more varied feedback,” Meta said in a blog post.

Chatbots that learn from interactions with people can learn from their good and bad behaviour.

In 2016 Microsoft apologised after Twitter users taught its chatbot to be racist.

Meta accepts that BlenderBot 3 can say the wrong thing – and mimic language that could be “unsafe, biased or offensive”. The company said it had installed safeguards, but the chatbot could still be rude.

When I asked BlenderBot 3 what it thought about me, it said it had never heard of me.

“He must not be that popular,” it said.
